I thought I would share this story… It’s another case of “it’s the network’s fault” when in reality the problem has nothing to do with the network, yet it falls to the network engineers and consultants to prove that beyond a reasonable doubt.

I can’t tell you how much it irks me to hear people say “it’s the network’s fault” when they have absolutely no clue how anything works and no data to support their wild claims. I would think a lot more of them if they just said, “I’m sorry, I haven’t got a frigging clue what’s happening here, but can you help me?” And of course the problem always needs to be resolved yesterday, as if the building itself were on fire.

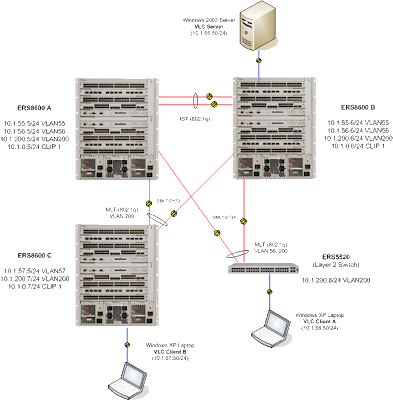

We have multiple Avaya Aura Contact Center (formerly Nortel Symposium) installations. At one of these locations we began receiving trouble tickets reporting that the Agent Desktop Display (ADD), a small software application that listens to a Multicast stream and displays a ticker-tape banner with the contact center queue details, was quietly closing after only a few minutes of running on the local desktop/laptop. The local telecom technician verified that the problem only occurred on one specific floor; users on the other floors had no problems. A quick check of the core Avaya Ethernet Routing Switch 8600 and the edge Avaya Ethernet Routing Switch 5520s indicated that IGMP and PIM were configured and working properly.

Note: A few years back I detailed how to configure IGMP, DVMRP and PIM for Multicast routing.

I asked the local telecom technician to perform a packet trace so I could see what was happening on the wire. The trace showed the desktop/laptop sending an IGMP Leave Group message and resetting the HTTP/TCP connection it had open to the web server. That was proof enough for me that the application was silently crashing and the operating system was cleaning up all of its open ports and IGMP group memberships.

    6376 2013-05-16 07:47:46.052281 10.1.46.144 10.1.38.55 TCP 54 3317 > 80 [RST, ACK] Seq=4467 Ack=10430 Win=0 Len=0
    6377 2013-05-16 07:47:46.052595 10.1.46.144 224.0.0.2 IGMPv2 46 Leave Group 230.0.0.2
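For anyone who hasn’t written a multicast receiver, here is a minimal Python sketch of what a listener like ADD presumably does under the hood. I can’t vouch for ADD’s actual implementation, and the function names below are hypothetical; only the group 230.0.0.2 and port 7040 come from the traces in this post. Joining the group with `IP_ADD_MEMBERSHIP` is what makes the host send an IGMP membership report. When the socket is closed, or the process dies and the OS closes it implicitly, the membership is dropped and an IGMPv2 host typically sends a Leave Group like frame 6377 above.

```python
import socket
import struct

# Group and port taken from the ADD trace in this post; everything else is illustrative.
GROUP = "230.0.0.2"
PORT = 7040


def build_mreq(group: str, iface: str = "0.0.0.0") -> bytes:
    """Pack a struct ip_mreq: the multicast group followed by the local interface address."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(iface))


def open_listener(group: str, port: int) -> socket.socket:
    """Bind a UDP socket to `port` and join `group`; the join triggers an IGMP membership report."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))  # wildcard bind: typically also accepts broadcasts sent to this port
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, build_mreq(group))
    return sock


def receive_one(group: str = GROUP, port: int = PORT, timeout: float = 5.0) -> bytes:
    """Receive a single datagram, then close the socket.

    Closing the socket (or the process exiting, which closes it implicitly) drops
    the group membership; if this was the host's last member of the group, an
    IGMPv2 host typically sends a Leave Group to 224.0.0.2.
    """
    sock = open_listener(group, port)
    try:
        sock.settimeout(timeout)
        data, _sender = sock.recvfrom(2048)
        return data
    finally:
        sock.close()
```

Calling `receive_one()` on a desktop in the affected VLAN would wait up to five seconds for one datagram from the stream and then leave the group.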

The actual Multicast stream from the application/web server was fine:

    6353 2013-05-16 07:47:43.995183 10.1.38.55 230.0.0.2 UDP 511 Source port: 1031 Destination port: 7040
    6354 2013-05-16 07:47:43.995502 10.1.38.55 230.0.0.2 UDP 502 Source port: 1025 Destination port: 7050
    6355 2013-05-16 07:47:43.995885 10.1.38.55 230.0.0.2 UDP 813 Source port: 1026 Destination port: 7030
    6356 2013-05-16 07:47:43.996301 10.1.38.55 230.0.0.2 UDP 860 Source port: 1032 Destination port: 7020
    6357 2013-05-16 07:47:43.996505 10.1.38.55 230.0.0.2 UDP 343 Source port: 1033 Destination port: 7060
    6358 2013-05-16 07:47:43.996726 10.1.38.55 230.0.0.2 UDP 331 Source port: 1027 Destination port: 7070
    6359 2013-05-16 07:47:43.996886 10.1.38.55 230.0.0.2 UDP 153 Source port: 1028 Destination port: 7110
    6360 2013-05-16 07:47:43.997048 10.1.38.55 230.0.0.2 UDP 153 Source port: 1034 Destination port: 7100
    6361 2013-05-16 07:47:43.997199 10.1.38.55 230.0.0.2 UDP 135 Source port: 1030 Destination port: 7090
    6362 2013-05-16 07:47:43.997371 10.1.38.55 230.0.0.2 UDP 135 Source port: 1036 Destination port: 7080
    6363 2013-05-16 07:47:43.997525 10.1.38.55 230.0.0.2 UDP 127 Source port: 1035 Destination port: 7120
    6364 2013-05-16 07:47:43.997647 10.1.38.55 230.0.0.2 UDP 127 Source port: 1029 Destination port: 7130
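If you ever need to compare ports across a bigger capture, a small script beats eyeballing. Here’s a rough Python sketch that pulls addresses and ports out of Wireshark summary lines pasted as plain text (like the ones in this post, even when several have been run together on one line) and groups the destination ports by destination address. The regular expression is tailored to this particular summary format and is my assumption, not a general Wireshark parser; for real work, exporting specific fields with tshark would be more robust.

```python
import re
from typing import NamedTuple


class UdpSummary(NamedTuple):
    frame: int
    src: str
    dst: str
    src_port: int
    dst_port: int


# Matches one Wireshark summary line in the format pasted in this post:
# frame, date, time, source IP, destination IP, "UDP", length, then the ports.
LINE_RE = re.compile(
    r"(?P<frame>\d+)\s+\S+\s+\S+\s+"
    r"(?P<src>\d+(?:\.\d+){3})\s+(?P<dst>\d+(?:\.\d+){3})\s+UDP\s+\d+\s+"
    r"Source port: (?P<sport>\d+)\s+Destination port: (?P<dport>\d+)"
)


def parse_udp_summaries(text: str) -> list[UdpSummary]:
    """Extract every UDP summary entry from pasted trace text (one or many per line)."""
    return [
        UdpSummary(int(m["frame"]), m["src"], m["dst"], int(m["sport"]), int(m["dport"]))
        for m in LINE_RE.finditer(text)
    ]


def dst_ports_by_group(records: list[UdpSummary]) -> dict[str, list[int]]:
    """Group destination ports by destination address, sorted and de-duplicated."""
    groups: dict[str, set[int]] = {}
    for r in records:
        groups.setdefault(r.dst, set()).add(r.dst_port)
    return {dst: sorted(ports) for dst, ports in groups.items()}


SAMPLE = """\
6353 2013-05-16 07:47:43.995183 10.1.38.55 230.0.0.2 UDP 511 Source port: 1031 Destination port: 7040
6354 2013-05-16 07:47:43.995502 10.1.38.55 230.0.0.2 UDP 502 Source port: 1025 Destination port: 7050
6205 2013-05-16 07:47:26.710685 10.1.47.210 10.1.47.255 UDP 251 Source port: 1759 Destination port: 7005
"""

print(dst_ports_by_group(parse_udp_summaries(SAMPLE)))
# {'230.0.0.2': [7040, 7050], '10.1.47.255': [7005]}
```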

The packet trace did show some odd UDP broadcast traffic from one specific desktop that happened to be running GE’s Centricity Perinatal (CPN), a software application used to monitor Labor & Delivery, the Nursery and the NICU. We use it to monitor, chart and graph the strips put out by the fetal monitors. A software component of the GE CPN solution called B-Relay is the piece that floods the VLAN with all those UDP broadcasts. Unfortunately, this UDP flooding is by design and is required for the application to function properly.

    6205 2013-05-16 07:47:26.710685 10.1.47.210 10.1.47.255 UDP 251 Source port: 1759 Destination port: 7005
    6206 2013-05-16 07:47:26.853810 10.1.47.210 10.1.47.255 UDP 822 Source port: 1760 Destination port: 7043
    6211 2013-05-16 07:47:28.215486 10.1.47.210 10.1.47.255 UDP 60 Source port: 1783 Destination port: 7013

Looking at the packet traces, I quickly noticed that although the individual destination ports differed, both applications were using ports in the same range, somewhere between 7001 and 7999. My theory was that the GE CPN software would eventually hit a UDP port the ADD software was listening on, and since it was a broadcast packet, ADD would try to process the data and quietly choke and crash. I shut down the Ethernet port connecting the GE CPN desktop and had the local telecom technician run his test again. He called back about 30 minutes later to let me know that everything was working fine and that whatever I had done had fixed the problem. Well, it wasn’t really fixed, because now I had to figure out how to get both applications to co-exist.
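To put the theory in concrete terms, here’s a small Python sketch comparing the ADD destination ports from the multicast trace (7020 through 7130 in steps of 10) against the B-Relay broadcast ports. The 7001 to 7999 range is what the broadcasts appeared to span in the capture, not a documented GE specification. A UDP socket bound to the wildcard address on one of those ports, as multicast receivers commonly are, will generally accept a broadcast aimed at the same port too, which is why a collision matters even though ADD only asked for multicast.

```python
# Destination ports the ADD multicast stream used in the trace (7020, 7030, ..., 7130).
ADD_PORTS = frozenset(range(7020, 7131, 10))

# B-Relay broadcast ports observed in the trace, plus the 7001-7999 range the
# broadcasts appeared to span (an observation from the capture, not a GE spec).
CPN_SEEN = [7005, 7043, 7013]
CPN_RANGE = range(7001, 8000)


def collisions(ports, listening=ADD_PORTS):
    """Return, sorted, the ports that both applications would be using."""
    return sorted(set(ports) & listening)


print(collisions(CPN_SEEN))          # [] : none of the three sampled packets hit ADD
print(len(collisions(CPN_RANGE)))    # 12 : every ADD port lies inside the CPN range
```

The three sampled broadcasts happen to miss, but given enough time, a sender roaming that range would land on one of ADD’s ports.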

The solution was to isolate the GE CPN desktops in their own VLAN so that the UDP broadcasts wouldn’t reach the closet VLAN where the Contact Center users resided. Another possible solution would have been to change the UDP ports used by either the GE CPN or the Avaya ADD software, but that change would probably have taken weeks if not months. I was able to spin up a new VLAN in about 30 minutes and get everyone back up and running again.
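Why does a separate VLAN fix it? A VLAN is its own broadcast domain, so a broadcast sent inside the new VLAN never reaches hosts in the closet VLAN unless something deliberately relays it. The Python sketch below assumes the closet VLAN is 10.1.46.0/23. That is an inference, not something the trace shows: it’s the smallest subnet containing both desktops from the trace, and its broadcast address is exactly the 10.1.47.255 that B-Relay was hitting. The new CPN subnet, 10.1.200.0/24, is purely hypothetical.

```python
import ipaddress

# Addresses taken from the packet traces in this post.
ADD_HOST = ipaddress.ip_address("10.1.46.144")   # Contact Center desktop running ADD
CPN_HOST = ipaddress.ip_address("10.1.47.210")   # GE CPN desktop running B-Relay
BROADCAST = ipaddress.ip_address("10.1.47.255")  # where B-Relay sent its broadcasts

# Hypothetical subnet for the new, isolated CPN VLAN (not from the post).
CPN_VLAN = ipaddress.ip_network("10.1.200.0/24")


def smallest_common_network(a, b):
    """Return the longest-prefix IPv4 network containing both addresses (an inference, not the real config)."""
    for prefix in range(32, -1, -1):
        net = ipaddress.ip_network(f"{a}/{prefix}", strict=False)
        if b in net:
            return net
    raise ValueError("addresses share no IPv4 network")


def receives_broadcast(host, host_subnet, bcast):
    """True if a broadcast to `bcast` is local to `host`'s subnet."""
    return host in host_subnet and bcast == host_subnet.broadcast_address


closet = smallest_common_network(ADD_HOST, CPN_HOST)
print(closet, closet.broadcast_address)                                  # 10.1.46.0/23 10.1.47.255
print(receives_broadcast(ADD_HOST, closet, BROADCAST))                   # True: shared VLAN
print(receives_broadcast(ADD_HOST, closet, CPN_VLAN.broadcast_address))  # False: CPN isolated
```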

Have you got a story to share? I’d love to hear it!

Cheers!
