It was just over 2 years ago that I designed and stood up our first off-campus data center in Philadelphia, PA. Since then we’ve completely vacated our original data center, migrating all the servers, applications and services to the new one. Last month we relocated our offices, leaving the old data center and office space behind forever. The new office space is very nice and has a lot of (much needed) conference rooms, all of which have built-in audio/video capabilities with either an overhead projector or a flat-screen TV. I’m still hoping to have a LAN party someday on those 61″ monster displays, perhaps with Call of Duty: Black Ops 2?

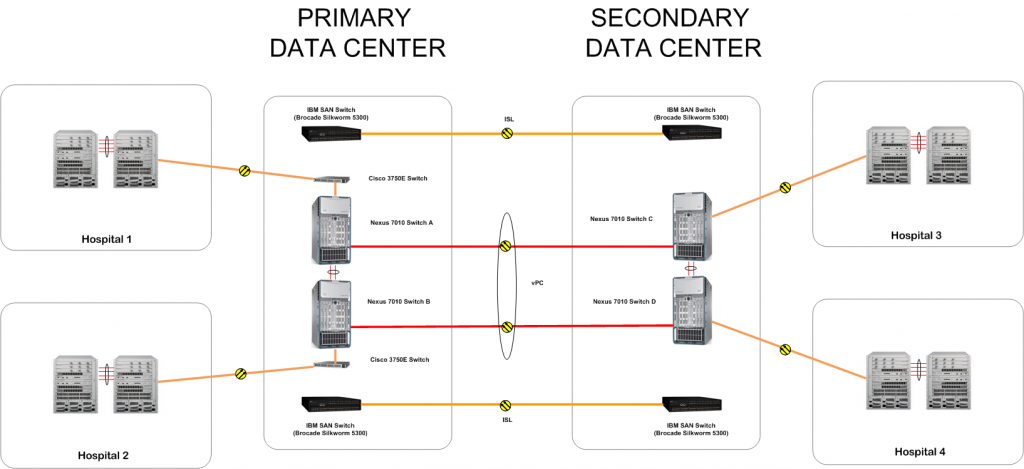

In June we started deploying our secondary data center with the intent of providing our own business continuity and disaster recovery services for our tier 1 applications, including all our data storage needs. The design gives us the flexibility to run both DCs in an active/active configuration with the ability to move workloads (virtual machines) between them. While the design allows us that option, we’re still testing how we’re going to handle all the different disaster scenarios: blade, enclosure, rack, SAN, cage, entire data center, etc. Our primary data center rings in at 800 sq ft, while our secondary data center is only 300 sq ft. This is possible because we’re using a traditional disaster recovery model for our big box non-tier 1 applications that, for one reason or another, aren’t virtualized. This helps reduce the number of lazy assets hanging around and helps control some of the budget numbers. I fully expect the number of big box applications to continue to shrink over time as more and more application vendors embrace virtualization.

We’ve had pretty good success with the design of our first data center, so we only made a few corrections. There are a lot of logistics to consider in any design, especially around the power and cooling requirements.

The Equipment

What equipment did we use? We had already deployed Cisco at our primary data center, so we decided to stay with Cisco at our secondary data center.

- Cisco Nexus 7010

- Cisco Nexus 5010

- Cisco Nexus 2248

- Cisco Nexus 1000V

- Cisco Catalyst 3750X

- Cisco Catalyst 2960G

- Cisco ASA5520

- Cisco ACE 4710

- Cisco 3945 Router (Internet)

- Cisco 2811 Router (internal T1 locations)

What racks did we use for the network equipment?

- Liebert Knurr Racks

- Liebert MPH/MPX PDUs

What equipment did we use for the servers/blades?

- HP Rack 10000 G2

- HP Rack PDU (AF503A)

- HP IP KVM Console (AF601A)

- HP BladeSystem C7000 Enclosure

- HP Virtual Connect Flex-10 Interconnect

- HP SAN 8Gb Interconnect

- Cisco Catalyst 3120X

- HP BL460c G7

- HP BL620c G7

- HP DL380 G8

- HP DL360 G8

What are we using for storage?

- IBM XIV System Storage Gen3 (SAN) (w/4 1Gbps iSCSI replication ports)

- IBM SAN80B-4 SAN Switch

- EMC DD860 (Disk-Disk backup via Symantec NetBackup)

Additional miscellaneous equipment:

- MRV LX-4048T (terminal server)

We had some challenges designing our secondary data center due to the density of our equipment. We had to stay under the maximum kW per sq ft load that the room (data center) was designed to handle. This is a simple calculation based on the kW utilization of the equipment to determine whether there is adequate power and cooling available to meet that demand. We also had to maintain an N+1 design, so we really can’t consume more than 40% of our capacity, leaving 10% for reserve. While some vendors charge a flat fee for the space (power included), others charge per kWh, so it’s very important to understand what type of demand you’re going to be placing on the data center.
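As a rough illustration of that calculation, here is a minimal Python sketch. It assumes the 40% figure comes from splitting the load across two redundant feeds (each feed can be at most 50% loaded so it can carry everything if its partner fails, minus the 10% reserve). The rack loads, the room density limit and the feed capacity are made-up numbers for illustration, not our actual figures; only the 300 sq ft comes from the text above.

```python
def density_kw_per_sq_ft(equipment_kw, room_sq_ft):
    """Average power density of the room in kW per square foot."""
    return sum(equipment_kw) / room_sq_ft


def usable_feed_kw(feed_capacity_kw, reserve_fraction=0.10):
    """Load allowed on ONE of two redundant feeds.

    Assumption: each feed must be able to carry the whole load alone,
    so it may be at most 50% loaded; the reserve comes off that.
    """
    return feed_capacity_kw * (0.5 - reserve_fraction)


def fits(equipment_kw, room_sq_ft, max_kw_per_sq_ft, feed_capacity_kw):
    """True if the equipment stays within both the density and power limits."""
    total_kw = sum(equipment_kw)
    within_density = total_kw / room_sq_ft <= max_kw_per_sq_ft
    # Assumption: the load is split evenly across the two feeds.
    within_power = total_kw / 2 <= usable_feed_kw(feed_capacity_kw)
    return within_density and within_power


# Hypothetical per-rack draw in kW (not real measurements).
racks_kw = [3.5, 4.0, 2.5, 5.0]

print(density_kw_per_sq_ft(racks_kw, room_sq_ft=300))
print(fits(racks_kw, room_sq_ft=300, max_kw_per_sq_ft=0.15, feed_capacity_kw=20.0))
```

If the provider bills per kWh rather than a flat fee, the same total kW figure is also the starting point for estimating the monthly bill.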

My Design

We stood up a pair of Ciena 5200s from Zayo (formerly AboveNet), providing us a DWDM ring with 4 wavelengths between our primary and secondary data centers. We’re using 2 wavelengths for the IP network between 2 pairs of Cisco Nexus 7010s and 2 wavelengths for the Fibre Channel SAN network between 2 pairs of IBM SAN switches. We have the option of adding up to 4 additional wavelengths before we need to add any hardware, so we have room for growth. The 4 wavelengths are diverse between an east and a west path, but they are not protected, so it’s up to the higher-layer protocols to provide the redundancy and failover.

Not visible in the diagram above is a 10GE WAN ring that connects all our hospitals together. The primary and secondary data centers are also tied into that ring via multiple peering points for redundancy. You might be asking yourself why I’m using a Cisco 3750E as a termination switch in our primary data center. At the time we deployed our Cisco Nexus 7010s, they didn’t support the 10GBase-ER SFP+ optic, so I had to use the Cisco 3750E (with RPSU) as a glorified media converter from 10GBase-ER to 10GBase-SR. The Cisco Nexus 7010 now has a 10GBase-ER SFP+ optic available, so we didn’t need to use the Cisco 3750E in the secondary data center.

We are essentially stretching a Layer 2 vPC connection between the 2 data centers. It’s possible that some folks will get excited at the mention of Layer 2 between the data centers, but it’s the best solution for us at this time, and it certainly has pros and cons like everything in networking. We looked at potentially running OTV between the Cisco Nexus 7010s but ultimately decided to use a vPC configuration. We are only stretching the virtual machine VLANs that we need between the data centers.
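To illustrate the idea of stretching only the VLANs that are needed, here is a small Python sketch that builds the `switchport trunk allowed vlan` line for an inter-DC trunk from a VLAN inventory. The VLAN IDs, names and the `stretch` flag are hypothetical and only there for the example; this is not our actual configuration or tooling.

```python
def compress_vlans(vlan_ids):
    """Collapse VLAN IDs into a compact list such as '100-102,200'."""
    ranges = []
    for vid in sorted(set(vlan_ids)):
        if not 1 <= vid <= 4094:
            raise ValueError(f"invalid VLAN ID: {vid}")
        if ranges and vid == ranges[-1][1] + 1:
            ranges[-1][1] = vid          # extend the current run
        else:
            ranges.append([vid, vid])    # start a new run
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


def allowed_vlan_command(inventory):
    """Build the trunk command for the VLANs flagged to be stretched."""
    stretched = [vid for vid, info in inventory.items() if info["stretch"]]
    if not stretched:
        return "switchport trunk allowed vlan none"
    return f"switchport trunk allowed vlan {compress_vlans(stretched)}"


# Hypothetical inventory: only the virtual machine VLANs are stretched.
inventory = {
    10:  {"name": "mgmt",      "stretch": False},
    100: {"name": "vm-prod-a", "stretch": True},
    101: {"name": "vm-prod-b", "stretch": True},
    102: {"name": "vm-prod-c", "stretch": True},
    200: {"name": "vm-dmz",    "stretch": True},
    300: {"name": "bigbox",    "stretch": False},
}

print(allowed_vlan_command(inventory))
```

Keeping the allowed list explicit like this limits the Layer 2 failure domain to the VLANs that actually need to exist in both data centers.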

My Thoughts

There’s a lot of work required to design any data center or even an ICR (Intermediate Communications Room), CCR (Central Communications Room), MDF (Main Distribution Frame) or IDF (Intermediate Distribution Frame). You’re immediately confronted with space, power and cooling challenges, never mind coming up with the actual IP addressing scheme, VLAN assignments, routing vs bridging, etc. You need to determine how much cabling you’ll need, both CAT6 and fiber, and perhaps you’ll look to use twinax DAC (Direct Attach Copper) cables for your 10GE connections. Let’s not forget to include the ladder racks, basket trays, fiber conduits, PDUs, out-of-band networking, etc.

You also need to design the data center as if it were 300+ miles away… license those iLOs (HP Integrated Lights-Out), purchase IP-enabled KVMs, purchase console/terminal servers (Opengear or MRV) and wire everything up as if you will never have the opportunity to visit it again. We’ve had a few issues in the past few years that were resolved quickly (in less than 15 minutes) thanks to having all our iLOs licensed, all our KVMs IP enabled, all our console/serial ports connected to a console/terminal server, and the ability to dial into the console/terminal server should the problem get really bad.

Here’s a short story… We had a number of billing issues in the first few months of our contract with our current primary data center provider, and the data from our Liebert PDUs, HP PDUs, and HP C7000 enclosures was invaluable in calling into question the numbers that were being reported to us. In all honesty, when they told me we were consuming 53A on a 50A circuit, I knew that something was grossly wrong with their math. In the end the provider admitted that their numbers were grossly wrong, and the corrected numbers were in line with the data we collected from our equipment.
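The kind of sanity check that caught the problem can be sketched in a few lines of Python. The 53A and 50A figures are from the story above; the per-device readings, device names and the 10% tolerance are invented for the example and are not the real telemetry.

```python
def audit_circuit(reported_amps, breaker_amps, measured_amps, tolerance=0.10):
    """Compare a provider's reported draw against our own equipment readings.

    measured_amps maps a device name to the amps it reports on this circuit.
    Returns the measured total and a list of findings (empty if all is well).
    """
    measured_total = sum(measured_amps.values())
    findings = []
    # A sustained draw above the breaker rating would normally be expected
    # to trip the breaker, so such a report is suspicious on its face.
    if reported_amps > breaker_amps:
        findings.append("reported draw exceeds breaker rating")
    # Flag reports that differ from our telemetry by more than the tolerance.
    if abs(reported_amps - measured_total) > tolerance * measured_total:
        findings.append("reported draw disagrees with equipment telemetry")
    return measured_total, findings


# Hypothetical readings from the PDUs and a blade enclosure on one circuit.
readings = {"liebert-pdu-a": 9.5, "hp-pdu-a": 8.0, "c7000-enc1": 6.5}

total, findings = audit_circuit(reported_amps=53, breaker_amps=50,
                                measured_amps=readings)
print(total, findings)
```

Having your own numbers on hand is what turns a billing dispute from an argument into a comparison.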

It’s never a good idea to skimp on the documentation, and I really advise taking lots of pictures. You’d be surprised how quickly you can forget what the back of a specific rack looks like when you’re trying to walk Smart Hands through replacing a component at 2 AM.

Cheers!