In my latest adventure I had to untangle the interaction between a pair of Cisco ACE 4710s and Akamai’s Content Distribution Network (CDN), including SiteShield, Mointpoint, and SiteSpect. It’s truly amazing how complex, almost convoluted, a CDN can make any website. And when it fails you can guess who’s going to get the blame. Over the past few weeks I’ve been looking at a very interesting problem where an Internet-facing VIP was experiencing a very unbalanced distribution across the real servers in the serverfarm. I wrote a few quick and dirty Bash shell scripts to do some repeated load tests utilizing curl, and sure enough I was able to confirm that there was something amiss between the CDN and the LB. If I tested against the origin VIP I had near-perfect round-robin load balancing across the real servers in the VIP; if I tested against the CDN I got very uneven load-balancing results.
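The original scripts were Bash and curl and aren't reproduced here. As a rough stand-in, the Python sketch below shows the general idea of such a test: fire a batch of requests and count which real server answered each one. It assumes each real server identifies itself in a response header; the header name `X-Served-By` is hypothetical, so substitute whatever your servers actually expose.

```python
from collections import Counter
from urllib.request import urlopen


def fetch_server_id(url, header="X-Served-By"):
    """Make one request on a fresh connection and return the server's ID.

    The header name is an assumption; it is not something the ACE or
    Akamai adds for you.
    """
    with urlopen(url, timeout=5) as resp:
        return resp.headers.get(header, "unknown")


def tally(url, count, fetch=fetch_server_id):
    """Send `count` requests and count the responses per real server."""
    return Counter(fetch(url) for _ in range(count))


def spread(counts):
    """Gap between the busiest and idlest server; near 0 means even."""
    return max(counts.values()) - min(counts.values()) if counts else 0
```

Running `tally()` once against the origin VIP and once against the CDN hostname, then comparing `spread()` for the two results, gives a quick read on how even the distribution is. Servers that received no requests at all won't appear in the counts, so check the number of keys as well.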

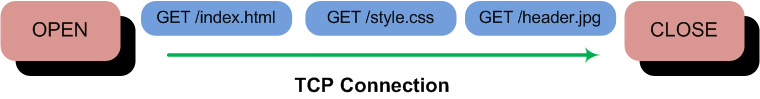

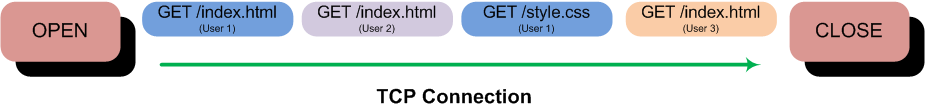

When a web browser opens a connection to a web server it will generally send multiple requests across a single TCP connection, similar to the figure below. Occasionally some browsers will even utilize HTTP pipelining if both the server and browser support that feature, sending multiple requests without waiting for the corresponding HTTP responses.

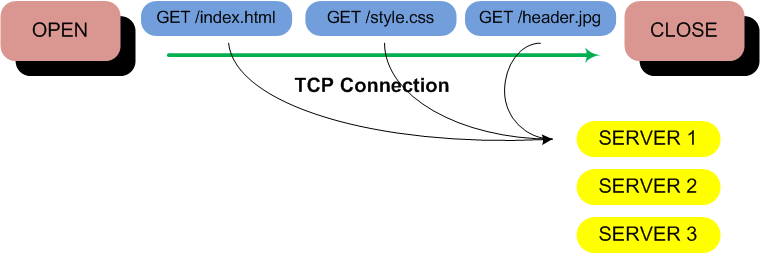

The majority of load balancers, including the Cisco ACE 4710 and the A10 AX ADC/Thunder, will look at the first request in the TCP connection and apply the load-balancing metric and forward the traffic to a specific real server in the VIP. In order to speed the processing of future requests the load balancer will forward all traffic in that connection to the same real server in the VIP. This generally isn’t a problem if there’s only a single user associated with a TCP connection.

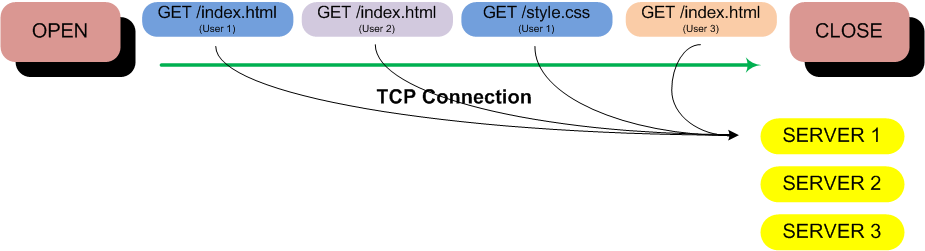

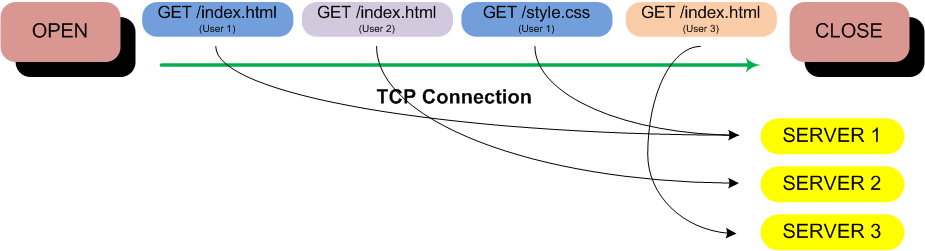

Akamai will attempt to optimize the number of TCP connections from their edge servers to your origin web servers by sending multiple requests from different users all over the same TCP connection. In the example below there are requests from three different users but it’s been my experience that you could see requests for dozens or even hundreds of users across the same TCP connection.

And here lies the problem: the load balancer will only evaluate the first request in the TCP connection. All subsequent requests on that connection are sent to the same real server, leaving some servers overutilized and others underutilized.

Thankfully there are configuration options in the majority of load balancers to work around this problem and instruct the load balancer to evaluate all requests in the TCP connection independently.

A10 AX ADC/Thunder

strict-transaction-switch

Cisco ACE 4710

    parameter-map type http HTTP_PARAMETER_MAP
      persistence-rebalance strict

With the configuration change made, every request in the TCP connection is now evaluated and load-balanced independently, resulting in a more even distribution across the real servers in the farm.
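To see why this matters, here is a toy simulation of the two behaviors. It is a simplified model, not the ACE's or A10's actual algorithm: plain round-robin, with made-up server names and made-up request counts per connection.

```python
from collections import Counter
from itertools import cycle


def simulate(connections, servers, rebalance):
    """Distribute requests round-robin across `servers`.

    connections: request counts, one entry per TCP connection.
    rebalance=False: choose a server once per connection.
    rebalance=True: choose a server for every request.
    """
    rr = cycle(servers)
    load = Counter({s: 0 for s in servers})
    for request_count in connections:
        if rebalance:
            for _ in range(request_count):
                load[next(rr)] += 1
        else:
            load[next(rr)] += request_count
    return dict(load)


servers = ["web1", "web2", "web3"]
# Hypothetical traffic: three CDN connections with very different volumes.
connections = [300, 20, 10]

print(simulate(connections, servers, rebalance=False))
# {'web1': 300, 'web2': 20, 'web3': 10}
print(simulate(connections, servers, rebalance=True))
# {'web1': 110, 'web2': 110, 'web3': 110}
```

The connections themselves are spread perfectly evenly in the first case, one per server, yet the request load is wildly skewed because one connection carries most of the traffic.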

In this scenario I’m using HTTP cookies to provide session persistence and ‘stickiness’ for the user sessions. If your application is stateless then you don’t really need to worry that a user lands on the same real server for each and every request.
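As a rough illustration of how per-request evaluation and cookie stickiness fit together, the sketch below routes each request on its own: a request carrying a valid persistence cookie goes back to the pinned server, and anything else falls through to round-robin and gets a cookie to set. This is a simplified model rather than how any particular load balancer implements it, and the cookie name `SERVERID` is hypothetical.

```python
from itertools import cycle

COOKIE_NAME = "SERVERID"  # hypothetical cookie name, for illustration only


def make_balancer(servers):
    """Build a per-request router with cookie-based stickiness."""
    rr = cycle(servers)
    valid = set(servers)

    def route(cookies):
        """Return (server, cookie_to_set) for a single request.

        cookies: dict of cookie name to value parsed from the request.
        cookie_to_set is None when the request was already pinned.
        """
        pinned = cookies.get(COOKIE_NAME)
        if pinned in valid:
            return pinned, None
        server = next(rr)
        return server, (COOKIE_NAME, server)

    return route
```

Because the decision is made per request, it no longer matters that requests from many different users arrive on the same TCP connection: each one is routed by its own cookie.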

Cheers!

Image Credit: topfer