We have a number of Survivable Remote Gateways (SRG) across our network providing local PSTN and E-911 access for our smaller IP telephony deployments. We recently went about applying a number of cumulative updates and ran into a few different problems.

Fortunately, both of the issues we experienced had already been documented by Nortel as known issues.

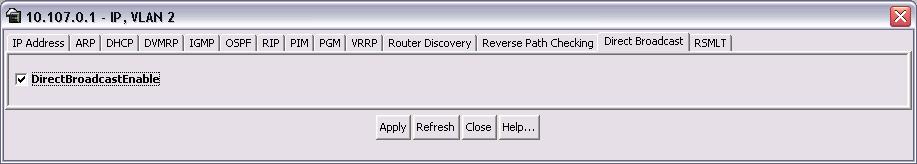

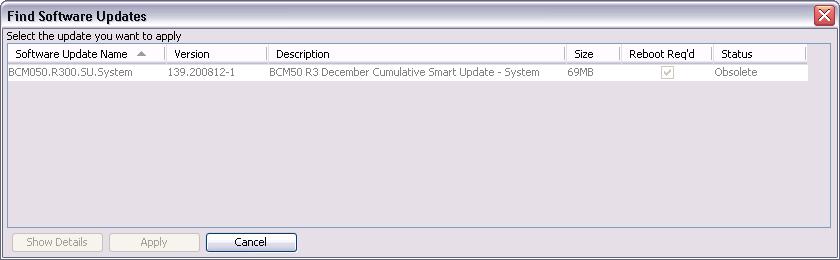

We had an SRG50 that had been upgraded from 1.0 to 3.0 and had the December 2008 Desktop update (BCM050.R300.SU.Desktop-140.200812-1.zip) applied. However, when we tried to apply the December 2008 System update (BCM050.R300.SU.System-139.200812-1.zip) through Nortel’s Business Element Manager, the software reported that the update was obsolete (see figure). In the past Nortel released dozens of separate patches for the various components of the SRG/BCM platform; the move to cumulative updates has greatly simplified managing and patching the SRGs and BCMs.

This problem had been documented by Nortel in a support document entitled Business Communication Manager (BCM) / Survivable Remote Gateway (SRG) / BCM50 / BCM450 Top Issues. Here’s the excerpt that concerns this specific problem:

ISSUE: Inability to apply any update numbered over 1xx, shows Obsolete. Issue will manifest on BCM50 R3 systems with any of the following SU System Updates applied as last update:

– BCM050.R300.SU.System-21.200801

– BCM050.R300.SU.System-25.200802

– BCM050.R300.SU.System-28.200802

RELEASE: BCM50 R3 or any BCM50 hardware loaded with R3 software (6.x)

CR: NONE

SYMPTOMS: Inability to apply any update numbered over 1xx, shows Obsolete.

WORKAROUND: Apply required update BCM050.R300.SOFTWARE-MANAGEMENT-63 or greater.

STATUS: Update BCM050.R300.SOFTWARE-MANAGEMENT-63 is now bound into BCM50 R3 System SU 200901 for application. Details regarding this dependency are imbedded into SU Update Release Notes. BCM050.R300.SOFTWARE-MANAGEMENT-63 is also generally available and can be downloaded as a stand alone update from Nortel.com.

RECOMMENDATIONS: Apply required update BCM050.R300.SOFTWARE-MANAGEMENT-63 or greater to any systems that match this particular profile.

Once we loaded BCM050.R300.SOFTWARE-MANAGEMENT-63 into the SRG50 we were able to load and apply the cumulative system update for December 2008.
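If you manage many SRGs, the condition in Nortel’s excerpt can be checked against each box’s update history before you try to apply an update. Below is a minimal Python sketch, not a Nortel tool, that makes several assumptions: update names follow the pattern of the files mentioned in this post (platform.release.[SU.]component-number[.YYYYMM][-n][.zip]), which is inferred from these names rather than from any Nortel specification; “numbered over 1xx” means a build number of 100 or higher; and you supply the update history yourself, since the code does not talk to Element Manager. `parse_update` and `shows_obsolete_bug` are hypothetical helpers.

```python
import re
from dataclasses import dataclass
from typing import Iterable, Optional

# Hypothetical name pattern inferred from the update files in this post,
# e.g. "BCM050.R300.SU.System-139.200812-1.zip" or
# "BCM050.R300.SOFTWARE-MANAGEMENT-63". Not an official Nortel format.
UPDATE_RE = re.compile(
    r"^(?P<platform>BCM\d+)\.(?P<release>R\d+)\."
    r"(?:SU\.)?"                         # cumulative "SU" marker, if present
    r"(?P<component>[A-Za-z-]+?)-(?P<number>\d+)"
    r"(?:\.(?P<date>\d{6}))?"            # YYYYMM release date, if present
    r"(?:-\d+)?(?:\.zip)?$"              # optional package suffix and extension
)


@dataclass(frozen=True)
class Update:
    platform: str
    release: str
    component: str
    number: int
    date: Optional[str]


def parse_update(name: str) -> Update:
    """Split an update name into its parts; raise ValueError if it doesn't fit."""
    m = UPDATE_RE.match(name)
    if m is None:
        raise ValueError(f"unrecognised update name: {name!r}")
    return Update(m["platform"], m["release"], m["component"],
                  int(m["number"]), m["date"])


# Last-applied System SUs listed in Nortel's "Top Issues" excerpt.
AFFECTED_LAST_UPDATES = {
    parse_update(n)
    for n in (
        "BCM050.R300.SU.System-21.200801",
        "BCM050.R300.SU.System-25.200802",
        "BCM050.R300.SU.System-28.200802",
    )
}


def shows_obsolete_bug(last_system_su: str, candidate: str,
                       installed: Iterable[str]) -> bool:
    """True if the documented 'Obsolete' condition appears to apply.

    last_system_su is the most recent System SU on the box. Assumes
    "numbered over 1xx" means build number >= 100 and that
    SOFTWARE-MANAGEMENT-63 or greater clears the condition.
    """
    last = parse_update(last_system_su)
    cand = parse_update(candidate)
    has_fix = any(
        u.component == "SOFTWARE-MANAGEMENT" and u.number >= 63
        for u in map(parse_update, installed)
    )
    return last in AFFECTED_LAST_UPDATES and cand.number >= 100 and not has_fix


if __name__ == "__main__":
    # Illustrative history only, not the actual SRG50 from this post.
    last_su = "BCM050.R300.SU.System-28.200802"
    target = "BCM050.R300.SU.System-139.200812-1.zip"
    print(shows_obsolete_bug(last_su, target, [last_su]))  # True
    print(shows_obsolete_bug(last_su, target,
                             [last_su, "BCM050.R300.SOFTWARE-MANAGEMENT-63"]))  # False
```

The sample history in the `__main__` block is made up for illustration; the post doesn’t say which System SU was last applied on our SRG50.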

We also had a second problem: a brand new SRG50 wouldn’t accept any new software updates after we installed the December 2008 System update (BCM050.R300.SU.System-139.200812-1.zip). Nothing would happen when we clicked the “Get New Updates” button within Business Element Manager. We tried running Business Element Manager from multiple desktops and laptops but got the same symptom, which left us stumped. Again, this problem had already been documented by Nortel:

ISSUE: Unable to apply further patches after applying BCM050.R300.SU.System-139.2008012. This is found on new BCM50 R3 installations and might not surface if there are patches already installed on the system.

RELEASE: BCM50 R3

CR: Q01975339

SYMPTOMS: Unable to apply any more patches.

WORKAROUND: Download the latest Element Manager from www.nortel.com and install.

STATUS: Design is discussing best option to resolve/avoid this issue on future SUs.

RECOMMENDATIONS: Please install the BCM50 R3 SUs just released in January:

BCM050.R300.SU.Desktop-152.200901

BCM050.R300.SU.System-151.200901

We downloaded Business Element Manager v2.0.300-0 from Nortel’s website and upgraded our existing installation, after which we were able to apply the remaining patches. I’m not sure how Element Manager’s version numbering works, but after installing v2.0.300-0 the Help -> About screen reported v1.05.0.

Cheers!

We’re preparing to deploy 300+ i2002/i2004 IP telephones over the next few weeks. In preparation for this deployment we decided to upgrade the current IP phone firmware from 0604DBG to 0604DCG. The site has a Nortel Succession 1000M Call Server with three Succession Remote Gateway (SRG) 50s providing local PSTN and E-911 services at three remote facilities. We have done this dozens of times in multiple locations and never really had an issue (except when ‘
